Introduction

Legal scholarship is one of the few areas in academia that allows non-exclusive, non-blind submissions.1 This means that an author may simultaneously submit the same manuscript to multiple journals, and the reviewers at those journals will be aware of the author’s identity.2 The non-blind aspect of legal scholarship has led to widespread accusations of letterhead bias3 and equally widespread calls for blind article selection.4 Letterhead bias occurs when journal editors use information about the author (reputation, institutional affiliation, prior publications, etc.) as a proxy for article quality and adjust their likelihood of accepting the submission accordingly.5 Because law journals’ review processes are private, inquiry into the practice has so far been largely limited to anecdotes.6 The absence of empirical research on the subject has given rise to bold claims, such as the hyperbolic assertion that “[s]omebody from a top 5 school could bluebook a ham sandwich and get it published in a top 10 journal.”7

While most law reviews have not adopted blind review, a few have, providing a unique opportunity to measure how blind review affects which authors are published in which journals. If the authors selected by law reviews with a blind selection process are affiliated with less prestigious institutions than the authors selected by comparable non-blind law reviews, this would be evidence both that letterhead bias exists in legal academia and that blind review helps combat it. This Essay reports the findings of a first-of-its-kind study that performs such an analysis. As the first quantitative analysis of the subject, it helps shed light on the potential existence and prevalence of letterhead bias and on the effectiveness of blind review in minimizing it.

Methodology

The existence of letterhead bias, and the extent to which it affects editorial decisions, is difficult to prove. Simply demonstrating that top law journals are more likely to publish articles from top authors is not only circular but also fails to account for the fact that top authors would likely receive a disproportionate number of offers from top law journals regardless of letterhead bias. Fortunately, the existence of a few law journals that implement blind review allows for a more objective measurement of letterhead bias.

There are five flagship law journals that use blind review: those of the law schools at Harvard;8 Stanford;9 Yale;10 the University of California, Irvine;11 and the University of Washington.12 The combined score from the Washington & Lee 2021 Law Journal Rankings13 was used to determine which non-blind law journals were comparable to these blind journals and could therefore serve as the non-blind control group.14 For the University of California, Irvine and University of Washington journals, this process was simple: the journals immediately above and below each of them in the rankings were selected as the comparable non-blind journals. Under this methodology, the blind University of Washington Law Review is compared with the Ohio State Law Journal and the Indiana Law Journal, and the blind University of California, Irvine Law Review is compared with the Missouri Law Review and the DePaul Law Review. Unfortunately, the other three blind journals occupy the top three spots in the Washington & Lee Law Journal Rankings. This is problematic because it means the closest non-blind comparators are the fourth-, fifth-, and sixth-ranked journals, which are not truly comparable in prestige or objective score to the top three.15 Therefore, the blind Yale Law Journal, Harvard Law Review, and Stanford Law Review are considered together and compared with the group consisting of the Columbia Law Review, the University of Pennsylvania Law Review, and the Georgetown Law Journal, the fourth-, fifth-, and sixth-ranked journals, respectively.

To measure the average institutional rank of the authors who publish in each journal, every author of an article was recorded, along with the U.S. News & World Report peer rank of the law school at which the author teaches.16 No distinction was made between sole authors and co-authors. After authors of non-articles were excluded, the database contained over 500 authors.17
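The averaging step can be sketched in a few lines of Python. This is a minimal illustration, not the study’s actual procedure: it assumes each author is stored as a hypothetical (journal, peer_rank) pair, one entry per author per article, with sole authors and co-authors weighted equally as described above. The sample journal names and ranks are placeholders, not data from this study.

```python
from collections import defaultdict
from statistics import mean


def average_peer_rank(records):
    """Return the mean U.S. News peer rank of authors, keyed by journal.

    records: iterable of hypothetical (journal, peer_rank) pairs, one per
    author per article. Sole authors and co-authors count identically.
    """
    ranks_by_journal = defaultdict(list)
    for journal, peer_rank in records:
        ranks_by_journal[journal].append(peer_rank)
    return {journal: mean(ranks) for journal, ranks in ranks_by_journal.items()}


# Hypothetical records for illustration only (not data from this study).
sample = [
    ("Journal A", 10),
    ("Journal A", 30),
    ("Journal B", 45),
    ("Journal B", 55),
    ("Journal B", 80),
]
print(average_peer_rank(sample))  # Journal A averages 20, Journal B averages 60
```

Because rank 1 is the most prestigious, a lower average in this sketch corresponds to authors from more highly ranked schools.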

Results

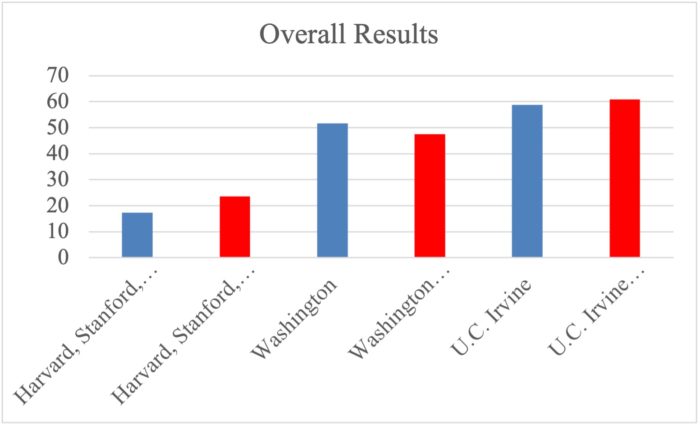

The results provide no evidence to support either the existence of letterhead bias or the claim that blind review combats it. The blind University of California, Irvine Law Review averaged an institutional rank of 58.7 for its authors, while its two comparator non-blind journals averaged 60.9. (Because rank 1 is the most prestigious, a lower average indicates authors from more highly ranked institutions.) The blind University of Washington Law Review averaged 51.7, while its two comparator non-blind journals averaged 47.5. The combined average for the blind flagship journals at Harvard, Stanford, and Yale was 17.43, while the three comparator journals for this group averaged 23.47.

Discussion

The result that the blind law journals from Harvard, Stanford, and Yale published authors from higher-ranked institutions than the three closest non-blind law journals is of limited value to this study. Had the opposite occurred, with Harvard, Stanford, and Yale averaging authors from lower-ranked institutions, this would have been strong evidence that the blind review process does combat letterhead bias. The result that did occur, however, does not allow for a determination either way, because, regardless of any letterhead bias, the more highly ranked journals at Harvard, Stanford, and Yale would be expected to publish authors from more prestigious institutions. An author who receives offers from both Harvard and Georgetown, for example, is likely to accept the former over the latter.

The results from the other two blind journals, the University of California, Irvine Law Review and the Washington Law Review, provide more insight. Combined, the average author institutional rank of these blind journals is 55.2, which is not significantly greater than the 54.2 average of their comparator non-blind journals. If letterhead bias were a significant factor in the publication decisions of non-blind journals, one would expect the average author institutional rank at the blind journals to be significantly greater (that is, to reflect less prestigious institutions) than at similarly situated non-blind journals. The fact that it is not is evidence against the existence of letterhead bias and against the notion that blind review helps mitigate the practice.
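The combined figures for the University of California, Irvine and Washington journals can be reproduced from the journal-level averages reported in the Results. The Python sketch below assumes that “combined” means an unweighted mean of the two journal-level figures, a reading the reported numbers are consistent with; a pooled average over individual authors could differ if the journals contributed different numbers of authors.

```python
from statistics import mean

# Journal-level average author peer ranks reported in the Results section.
blind = {
    "UC Irvine Law Review": 58.7,
    "Washington Law Review": 51.7,
}
comparators = {
    "Missouri & DePaul (UC Irvine comparators)": 60.9,
    "Ohio State & Indiana (Washington comparators)": 47.5,
}

# Assumption: "combined" is the unweighted mean of the two figures,
# not a pooled average over individual authors.
blind_combined = round(mean(blind.values()), 1)
comparator_combined = round(mean(comparators.values()), 1)

print(blind_combined, comparator_combined)  # 55.2 54.2

# A higher average rank number means authors from less prestigious schools,
# so letterhead bias would predict a clearly positive gap here.
print(round(blind_combined - comparator_combined, 1))  # 1.0
```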

While multiple factors influence the author-selection process, it is difficult to identify one that would offer an alternative explanation for the findings of this study. One could argue that the results of this study are invalid because journals that implement a blind review process may attract submissions from a different pool of authors than non-blind law journals. This may be true, but if so, it would only strengthen the findings of this study, not negate them. The authors most attracted to law journals with blind review would naturally be those who stand to benefit most from it, i.e., authors from lower-ranked law schools who fear that letterhead bias would disadvantage their submissions at non-blind journals. Any difference between the two author pools, then, would run in one direction: the blind journals would receive more submissions from authors at lower-ranked law schools. Therefore, regardless of any bias, the blind journals would be expected to publish more authors from lower-ranked law schools. Accordingly, the results of this study suggest that letterhead bias is not a significant factor in legal academia and that blind review does not significantly mitigate whatever letterhead bias may exist.

Potential Criticism

The most significant criticism of this study is likely its limited sample size.18 This is an unavoidable consequence of such a study, as there are only five blind law reviews. Nevertheless, it is a valid criticism. It does, however, only temper the strength of this study’s results; it in no way rebuts them. The findings of this study, though based on a limited sample, provide empirical evidence to support the claim that letterhead bias is not a significant problem and is not significantly mitigated by blind review.

Relatedly, one could argue that the existing evidence of letterhead bias is so overwhelming that belief in it remains justified absent significant evidence to the contrary, and that the findings of this study are not strong enough to rebut it. Again, this is a legitimate criticism. The findings presented in this study are just one data point, and they should be weighed against the evidence in favor of the existence of letterhead bias. Indeed, there is significant statistical, incentive-based, and anecdotal evidence to support the claim that letterhead bias is a significant factor in law journal publishing decisions. Furthermore, common sense suggests that if letterhead bias exists, blind review should reduce its prevalence.

Statistical Evidence of Letterhead Bias

While not dispositive, the high correlation between a law journal’s rank and the rank of its authors’ law schools is consistent with letterhead bias.19 Self-publishing bias, the practice of journals favoring authors from their own institutions,20 is also a form of letterhead bias. The uncanny prevalence of self-publishing, especially at top institutions, is therefore further evidence of letterhead bias. For example, in 2019 the Virginia Law Review had a 24% self-publishing rate.21 It would be a highly peculiar coincidence if, of the hundreds of submissions the Virginia Law Review receives from top-fifty law schools, 24% of the best just happened to come from the University of Virginia Law School.

Incentive-Based Evidence of Letterhead Bias

Ronald J. Krotoszynski, Jr., reasoned that “[t]he rules of the game strongly suggest . . . that factors unrelated to pure merit (however defined) will play an important role in a given law review’s publication decision.”22 For example, an “author’s institutional affiliation [is] a convenient proxy for gauging the probable merit of a submission.”23

Richard A. Posner illustrated the strong incentive for student editors, who have minimal experience evaluating legal scholarship, to engage in letterhead bias by comparing their behavior to that of ordinary consumers: “[T]hey do what other consumers do when faced with uncertainty about product quality; they look for signals of quality or other merit. The reputation of the author, corresponding to a familiar trademark in markets for goods and services, is one, and not the worst.”24

Anecdotal Evidence of Letterhead Bias

Two scholars writing on the subject observed that the practice of letterhead bias is “generally assumed” in legal academia.25 Surveys of student editors support this assumption. A 2008 survey concluded:

Articles Editors like to publish articles from well-known and widely-respected authors. . . . Articles Editors consider an author’s reputation because publishing work by respected authors is one way to increase a journal’s prestige . . . . [And] journal prestige, rather than the publication of quality legal scholarship, may be the most significant driver of publication decisions.26

A 2007 survey found that “[a] majority of respondents from nearly every school segment indicated they are influenced by the law school where an author teaches.”27 The 2008 survey likewise concluded that student editors “use author credentials extensively to determine which articles to publish.”28 The requirement at many law journals that submissions include a curriculum vitae also suggests the presence of letterhead bias.29

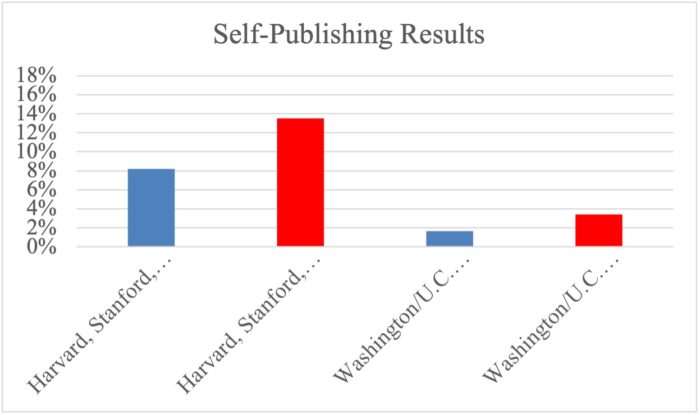

Blind Review and Self-Publishing

The methodology of this study also permits an additional analysis of how blind review affects legal scholarship, namely, its effect on the often-criticized practice of self-publishing.30 Self-publishing occurs when a law professor publishes in the journal at his institution of employment.31 Using the same comparisons of law journals as earlier in this study, there is some evidence to suggest that blind review may diminish the practice of self-publishing: the blind journals have lower rates of self-publishing than the comparable non-blind journals. This result, however, should be treated with some skepticism, because the limited sample size is an even greater concern here than in the study’s overall result. A single self-published article can greatly change a journal’s self-publishing rate in a sample of this size.
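The sensitivity described above is simple arithmetic: a journal’s self-publishing rate is the number of self-published articles divided by its total articles, so in a small sample each article moves the rate by a large step. The Python sketch below uses hypothetical article counts chosen only to illustrate the effect; they are not figures from this study.

```python
def self_publishing_rate(self_published, total_articles):
    """Share of a journal's articles written by its own institution's faculty."""
    if total_articles <= 0:
        raise ValueError("total_articles must be positive")
    return self_published / total_articles


# Hypothetical journal with 20 articles in the sample period.
before = self_publishing_rate(1, 20)
after = self_publishing_rate(2, 20)
print(f"{before:.0%} -> {after:.0%}")  # 5% -> 10%

# The same one-article change matters far less in a hypothetical larger sample.
print(f"{self_publishing_rate(5, 100):.0%} -> {self_publishing_rate(6, 100):.0%}")  # 5% -> 6%
```

In the small hypothetical sample, one additional self-published article doubles the rate; in the larger one, it moves the rate by a single percentage point.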

Blind Review Skepticism

The issue of self-publishing also calls into question the feasibility of fully blind review.32 Student editors of law journals are likely aware of the research projects of the professors at their institution, who likely discuss their research in the classroom and often employ students as research assistants. Simply removing the author’s name from a submission therefore would not ensure that student reviewers are blind to the author’s identity. A similar problem may arise even with authors from other institutions: some are well-known experts in their field, and some submissions continue the author’s prior research, either of which could reveal the author’s identity to reviewers. Furthermore, under blind review the author’s identity is still collected and known to the editor; only the reviewers are blind.33 Consequently, there is a risk that the editor will, subconsciously or otherwise, take the author’s identity into account when deciding whom to assign to review the submission.

Conclusion

This study’s methodological limitations do not permit a definitive determination of how blind review affects letterhead bias. Nevertheless, the results provide the first empirical data on the subject. They support the notion that letterhead bias is not a significant problem in legal academia and that blind review does not significantly alter publication decisions. This research, however, provides only a single data point that must be considered in light of the contrary evidence that letterhead bias does exist.

The results of this study call for replication in future research. If more law journals implement blind review, a replication study would allow more data to be analyzed and greater confidence in the conclusion. Even if blind journals remain limited to the five in this study, a replication study conducted at least two years from now would effectively double the sample size of the present study, allowing for greater confidence in the results. Additionally, a future study using a similar methodology could examine the potential for gender discrimination in non-blind article review.34

a. Powell Endowed Professor of Business Law, Angelo State University.

1. Albert H. Yoon, Editorial Bias in Legal Academia, 5 J. Legal Analysis 309, 311 (2013).

2. Id.

3. “Everyone has a sense that letterhead bias exists but no one can prove it.” Michael J.Z. Mannheimer, Anecdotal Evidence of Letterhead Bias, PrawfsBlawg (May 27, 2011, 1:22 PM), https://prawfsblawg.blogs.com/prawfsblawg/2011/05/anecdotal-evidence-of-letterhead-bias.html [https://perma.cc/2G8G-7V4X].

4. Stephen Thomson, Letterhead Bias and the Demographics of Elite Journal Publications, 33 Harv. J.L. & Tech. 203, 210 (2019) (“Legal academics generally favor a system of blind review in article selection . . . . Most of those academics also want the entire selection process to be blind.”); see also James Lindgren, An Author’s Manifesto, 61 U. Chi. L. Rev. 527, 538 (1994) (“Reform-minded student reviews should [c]onceal the author’s identity, gender, and institutional affiliation from those selecting the articles.”); Barry Friedman, Fixing Law Reviews, 67 Duke L.J. 1297, 1349 (2018) (“Review of articles ought to be blind.”).

5. Kevin M. Yamamoto, What’s in a Name? The Letterhead Impact Project, 22 J. Legal Stud. Educ. 65, 65 (2004); see also Fabio Arcila, Judging Scholarship, or, Would You Kill for Blind Review?, PrawfsBlawg (Dec. 7, 2009, 9:12 AM), https://prawfsblawg.blogs.com/prawfsblawg/2009/12/judging-scholarship-or-would-you-kill-for-blind-review.html [https://perma.cc/SQ99-9MUW].

6. Dan Subotnik & Glen Lazar, Deconstructing the Rejection Letter: A Look at Elitism in Article Selection, 49 J. Legal Educ. 601, 610 (1999) (providing two such anecdotal examples). In one, a professor from the University of Kentucky Law School received no offers when submitting her article to fifty law reviews. Id. Then, while visiting William and Mary Law School she resubmitted using their letterhead. Id. She received five inquiries in the first week. Id. The second anecdote is from a professor who engaged in a mass submission of an article, half of which were conducted on Chicago-Kent letterhead and half of which were on University of Chicago letterhead. Id. The latter group received offers from Penn and Northwestern, while the former received an offer from Arizona. Id.

7. John C, Comment to Are Law Reviews Guilty of Letterhead Bias?, Faculty Lounge (Mar. 6, 2008, 6:25 PM), https://www.thefacultylounge.org/2008/03/are-law-reviews.html [https://perma.cc/5Z4Y-5U4Z].

8. Submit, Harv. L. Rev., https://harvardlawreview.org/submissions/ (last visited Nov. 28, 2021) [https://perma.cc/2UX6-T274] (“To facilitate our anonymous review process . . . .”).

9. Article Submissions, Stan. L. Rev., https://www.stanfordlawreview.org/submissions/article-submissions/ (last visited Nov. 28, 2021) [https://perma.cc/4QKZ-6UG3] (“All voting Articles Editors complete their reads without knowledge of the author’s identity, institutional affiliation, or any other biographical information.”).

10. Volume 130 Submission Guidelines, Yale L.J., https://www.yalelawjournal.org/files/GeneralSubmissionsGuidelines130_8e6kes3j.pdf (last visited Nov. 28, 2021) [https://perma.cc/29TA-4DLV] (“We review manuscripts anonymously, without regard to the author’s name, prior publications, or pending publication offers.”).

11. Scholar Submissions, U.C. Irvine L. Rev., https://www.law.uci.edu/lawreview/submissions/scholar-submissions.html (last visited Nov. 28, 2021) [https://perma.cc/7LRV-GBEY] (“The Law Review requests that all manuscript files by anonymized, that is, stripped of names and identifying information, to preserve the Law Review’s blind review process.”).

12. Submissions, Wash. L. Rev., https://www.law.uw.edu/wlr/submissions (last visited Nov. 28, 2021) (“Washington Law Review uses an anonymous review process to reduce implicit bias when selecting articles.”).

13. W&L Law Journal Rankings, Wash. & Lee Sch. L., https://managementtools4.wlu.edu/LawJournals/Default.aspx (last visited Nov. 28, 2021) [https://perma.cc/9JCA-P7N9].

14. Washington & Lee Law Journal Rankings were utilized rather than U.S. News & World Report Law School Rankings because they are based on quantifiable standards rather than peer opinion. But note that the U.S. News & World Report Law School rankings are the standard for determining an institution’s prestige, which may factor into letterhead bias. Thomson, supra note 4, at 207.

15. For example, at the time of writing, the Yale Law Journal and Harvard Law Review have combined scores of 100 and 99.2, respectively, in the Washington & Lee Law Journal Rankings. The average combined score of the fourth-, fifth-, and sixth-ranked journals is only 71.7. W&L Law Journal Rankings, supra note 13.

16. The choice to use the peer rank instead of the overall rank was a deliberate one. Law schools outside of the top 150 are given a rank of “Tier 2” in the overall rankings. In the peer rankings, every American Bar Association-accredited law school is given a numerical ranking. Paul Caron, 2022 U.S. News Law School Peer Reputation Rankings (And Overall Rankings), TaxProf Blog (Mar. 30, 2021), https://taxprof.typepad.com/taxprof_blog/2021/03/2022-us-news-law-school-peer-reputation-rankings-and-overall-rankings.html [https://perma.cc/4DCB-8Y5K].

17. Authors of comments, notes, book reviews, responses, forewords, and in memoriam tributes were excluded from consideration and are not reflected in the sample size of over 500.

18. For many types of research, a sample size of over 500 would be more than sufficient. When trying to extrapolate causation from nuanced differences such as in this study, however, a larger sample size would have allowed for more confidence in the results.

19. See Thomson, supra note 4, at 215 (providing a linear regression model for journal ranking versus average author’s affiliated law school’s ranking). That study concluded that “[w]hen considered in the context of the existing literature and empirical research, this finding provides strong statistical grounding for establishing the phenomenon of letterhead bias . . . .” Id. at 259.

20. Id. at 221 n.78.

21. Id. at 224.

22. Ronald J. Krotoszynski, Jr., Commentary, Legal Scholarship at the Crossroads: On Farce, Tragedy, and Redemption, 77 Tex. L. Rev. 321, 330 (1998).

23. Id. at 329.

24. Richard A. Posner, The Future of the Student-Edited Law Review, 47 Stan. L. Rev. 1131, 1133–34 (1995).

25. Jason P. Nance & Dylan J. Steinberg, The Law Review Article Selection Process: Results from a National Study, 71 Alb. L. Rev. 565, 571 (2008).

26. Id. at 612–13.

27. Leah M. Christensen & Julie A. Oseid, Navigating the Law Review Article Selection Process: An Empirical Study of Those with All the Power—Student Editors, 59 S.C. L. Rev. 175, 188 (2007).

28. Nance & Steinberg, supra note 25, at 584.

29. For example, reviewing the submission standards for the top three non-blind law journals reveals that they all explicitly require the author to submit his curriculum vitae. Submissions Instructions, Colum. L. Rev., https://columbialawreview.org/submissions-instructions/ (last visited Nov. 28, 2021) [https://perma.cc/G2DQ-TQQH] (For book reviews, “[e]mail submissions should include the manuscript, the author’s CV, and a brief cover letter. . .”); Submissions, Univ. Penn. L. Rev., https://www.pennlawreview.com/submissions/ (last visited Nov. 28, 2021) [https://perma.cc/H22A-GRAQ] (“Submissions should be in Microsoft Word format and include a short abstract and the author’s CV.”); Submit to The Georgetown Law Journal, Geo. L.J., https://www.law.georgetown.edu/georgetown-law-journal/submit/submit-articles/ (last visited Nov. 28, 2021) [https://perma.cc/4KW8-ZJMX] (“Authors are asked to provide an abstract and CV with their submissions.”).

30. Michael Conklin, It’s Not What It Looks Like: How Claims of Self-Publishing Bias in Legal Scholarship Are Exaggerated, 39 Quinnipiac L. Rev. 1, 2 (2020). Critics of self-publishing point to the following harms caused by the practice: lack of diversity, the abusive practice of “trading up” to better journals, negative impact on journal credibility, diminished integrity in the process, reduced scholarship quality, and coercion faced by student editors. Id. at 13–17.

31. Thomson, supra note 4, at 221 n.78.

32. Conklin, supra note 30, at 22.

33. “Blind review” in law journals more specifically refers to double-blind review. This is where the reviewer and author are unaware of the identity of the other. It is only in triple-blind review that the editor is also unaware of the identity of the author. Hilda Bastian, The Fractured Logic of Blinded Peer Review in Journals, Absolutely Maybe (Oct. 31, 2017), https://absolutelymaybe.plos.org/2017/10/31/the-fractured-logic-of-blinded-peer-review-in-journals/ [https://perma.cc/APL4-VJBX]. For example, the Stanford Law Review explains, “Only the Senior Articles Editor knows the identity of the author.” Article Submissions, supra note 9.

34. Namely, if the ratio of female to male authors in blind law journals is greater than that found in comparable non-blind law journals, this would be strong evidence that female authors are harmed by editors being made aware of their gender.
